Adding a CDN to a load balancer (for a much faster website)

Posted Sep 22, 2023 | 8 min. (1661 words)

Here at Raygun, we like to go fast. Really fast. That’s what we do! When we see something that isn’t zooming, we try to figure out how to make it go faster. So today, we’re answering a simple (and relevant) question: how do we make our public site, raygun.com, much, much faster?

The answer, at first glance, is simple—we build it into a Content Delivery Network (CDN). But what if you have a load balancer serving your website, and you don’t want to rebuild everything to serve from a CDN? Well, that’s more complicated.

Let’s start by describing the issue.

Our website is complex. Like many other websites and teams, we collaborate to create it with different tools before serving it to you, the end user. We’re always adding new info and images when we release features and publish resources, and inevitably, after a while, it starts to get bloated and heavy. After some recent synthetic testing, we saw that the response times from some regions were not where they should be. Specifically, we noticed an average latency of roughly 600ms across the globe.

Now, you probably know that’s not ideal. But what does that latency really mean? Where’s it coming from? Let’s break down the time it takes for an end user to reach our web server into some simple, readable components.

Connect Time:

The “Connect Time” metric refers to the time taken by a synthetic request to establish a connection with the target server. It measures the duration from when the request is initiated to the moment a connection is successfully established.

Processing:

The “Processing Time” metric in synthetic requests refers to the time taken for the server to process the request and generate a response. It measures the duration from when the server receives the request until it completes processing and sends the response back to the client.

Resolve:

The “Resolve Time” metric on synthetic requests refers to the time taken to resolve the domain name in the requested Uniform Resource Locator (URL) to an IP address through the Domain Name System (DNS).

Transfer:

The Transfer Time for synthetic requests refers to the time taken for data to be transferred from the server to the client while executing synthetic tests or requests. It measures the duration from when the server starts sending the response to when the client receives it. The transfer time includes the network latency, data transmission time, and any processing delays in the server or client.
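To make these phases concrete, here is a minimal sketch of how one HTTPS request could be timed phase by phase using only the Python standard library. It is not how our synthetic testing tool works internally; the names `Timings`, `timings_from_marks`, and `measure` are hypothetical, and the "processing" figure here is simply time to first byte, so it also includes one network round trip.

```python
import socket
import ssl
import time
from dataclasses import dataclass


@dataclass
class Timings:
    """Phase durations in milliseconds, in the order they happen."""
    resolve_ms: float
    connect_ms: float
    tls_ms: float
    processing_ms: float
    transfer_ms: float

    @property
    def total_ms(self) -> float:
        return (self.resolve_ms + self.connect_ms + self.tls_ms
                + self.processing_ms + self.transfer_ms)


def timings_from_marks(marks: list[float]) -> Timings:
    """Turn six timestamps (seconds) into five phase durations (ms)."""
    if len(marks) != 6:
        raise ValueError("expected six timestamps")
    return Timings(*[(b - a) * 1000 for a, b in zip(marks, marks[1:])])


def measure(host: str, path: str = "/", port: int = 443) -> Timings:
    """Time a single HTTPS GET. Needs network access; IPv4 only for brevity."""
    marks = [time.perf_counter()]
    info = socket.getaddrinfo(host, port, family=socket.AF_INET,
                              proto=socket.IPPROTO_TCP)
    ip = info[0][4][0]
    marks.append(time.perf_counter())        # DNS resolved
    sock = socket.create_connection((ip, port), timeout=10)
    marks.append(time.perf_counter())        # TCP connection established
    tls = ssl.create_default_context().wrap_socket(sock, server_hostname=host)
    marks.append(time.perf_counter())        # TLS handshake finished
    request = f"GET {path} HTTP/1.1\r\nHost: {host}\r\nConnection: close\r\n\r\n"
    tls.sendall(request.encode("ascii"))
    tls.recv(1)                              # blocks until the first response byte
    marks.append(time.perf_counter())        # server has started responding
    while tls.recv(65536):                   # drain the rest of the response
        pass
    marks.append(time.perf_counter())        # transfer finished
    tls.close()
    return timings_from_marks(marks)
```

Calling `measure("raygun.com")` from machines in different regions would give a rough, single-sample version of the numbers a synthetic test averages over many runs.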

So using these metrics, let’s look at our numbers for the average response time across all synthetic tests.

| Metric | Time (ms) |
|---|---|
| Connect Time | 50 |
| Processing | 158 |
| Resolve | 150 |
| Transfer | 200 |
| TLS | 53 |

Not great. To tackle this, we could just throw a CDN at it and hope for the best. However, it’s better to break down what’s happening, understand the problem and the solution properly, and make dang sure we’re gonna go fast.

We chose AWS CloudFront CDN, which has multiple speed-oriented functions. The first we want to take advantage of is dynamic acceleration. AWS documentation describes dynamic acceleration as follows:

“Dynamic acceleration is the reduction in server response time for dynamic web content requests because of an intermediary CDN server between the web applications and the client. Caching doesn’t work well with dynamic web content because the content can change with every user request. CDN servers have to reconnect with the origin server for every dynamic request, but they accelerate the process by optimizing the connection between themselves and the origin servers.

If the client sends a dynamic request directly to the web server over the internet, the request might get lost or delayed due to network latency. Time might also be spent opening and closing the connection for security verification. On the other hand, if the nearby CDN server forwards the request to the origin server, they would already have an ongoing, trusted connection established. For example, the following features could further optimize the connection between them:

- Intelligent routing algorithms

- Geographic proximity to the origin

- The ability to process the client request, which reduces its size”

This is going to handle our large TLS connection overhead. When you connect to a website, the first thing your browser does is negotiate a cipher and establish an encrypted connection to the endpoint. Because we were hosted in N. Virginia, the request was:

- (1) going into the USA,
- (2) requesting the TLS connection, and then
- (3) carrying the response back, with response times growing with the distance traveled.

A TLS handshake needs one or more round trips to agree on the cipher and establish the encrypted connection, and every one of those round trips pays for that distance.

By relying on AWS dynamic acceleration for connectivity, a TLS request gets handled near the user by the closest CDN server instead of having to connect to the N. Virginia AWS server. So, we shave off some serious time from the TLS back-and-forth. AWS cleverly routes the rest of the traffic internally and securely, so even dynamic content is taken care of.
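A back-of-the-envelope sketch shows why terminating the handshake near the user matters. A new connection needs one round trip for the TCP handshake, then one more for a full TLS 1.3 handshake (two for TLS 1.2) before the first request can be sent. The round-trip times below are made-up illustrative values, not measurements from our tests.

```python
def handshake_ms(rtt_ms: float, tls_round_trips: int = 1) -> float:
    """Time before the first HTTP request can be sent on a new connection.

    One round trip for TCP, plus the TLS round trips
    (1 for a full TLS 1.3 handshake, 2 for TLS 1.2).
    """
    return rtt_ms * (1 + tls_round_trips)


# Hypothetical round-trip times, for illustration only.
RTT_TO_ORIGIN_MS = 200.0  # a distant user talking directly to N. Virginia
RTT_TO_EDGE_MS = 10.0     # the same user talking to a nearby edge location

direct = handshake_ms(RTT_TO_ORIGIN_MS)   # 400.0
via_edge = handshake_ms(RTT_TO_EDGE_MS)   # 20.0
print(f"direct: {direct} ms, via edge: {via_edge} ms")
```

The request still has to travel from the edge to the origin afterwards, but that hop can reuse a connection that is already open, so the handshake cost is only paid over the short distance.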

So, let’s start by putting CloudFront directly in front of the application load balancer that serves our website, to take advantage of the nice speed increase on connection requests.

Consider connection times: again, dynamic acceleration handles the connections by routing everything through the internal services of AWS. So, from a client perspective, the connection is being handled by the CDN location closest to the user. From here, we shave off some major latency while retaining the dynamic nature of the website. Even without enabling CDN caching, and with the website still being served from our load balancer, we notice some serious gains in speed based on the connection times alone.
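As a rough idea of what "CDN in front of the load balancer, caching off" can look like, here is a sketch that builds a CloudFront distribution config as a plain Python dict in the shape boto3's `create_distribution` expects. This is not our actual configuration: the function name, the origin label, and the arguments are hypothetical, and the policy IDs are passed in rather than hard-coded (AWS publishes managed policies such as CachingDisabled, whose IDs are listed in the CloudFront documentation). Aliases and the viewer certificate are left out for brevity.

```python
import uuid


def alb_distribution_config(alb_dns_name: str, cache_policy_id: str,
                            origin_request_policy_id: str) -> dict:
    """Build a DistributionConfig with the load balancer as the only origin."""
    origin_id = "website-alb"  # hypothetical label tying the behavior to the origin
    all_methods = ["GET", "HEAD", "OPTIONS", "PUT", "PATCH", "POST", "DELETE"]
    return {
        "CallerReference": str(uuid.uuid4()),  # must be unique per request
        "Comment": "CDN in front of the website load balancer",
        "Enabled": True,
        "Origins": {
            "Quantity": 1,
            "Items": [{
                "Id": origin_id,
                "DomainName": alb_dns_name,
                "CustomOriginConfig": {
                    "HTTPPort": 80,
                    "HTTPSPort": 443,
                    "OriginProtocolPolicy": "https-only",
                },
            }],
        },
        "DefaultCacheBehavior": {
            "TargetOriginId": origin_id,
            "ViewerProtocolPolicy": "redirect-to-https",
            # Pass the ID of a caching-disabled policy to keep everything dynamic.
            "CachePolicyId": cache_policy_id,
            "OriginRequestPolicyId": origin_request_policy_id,
            "AllowedMethods": {
                "Quantity": len(all_methods),
                "Items": all_methods,
                "CachedMethods": {"Quantity": 2, "Items": ["GET", "HEAD"]},
            },
        },
    }
```

With boto3 installed and credentials configured, the result could be passed along as `boto3.client("cloudfront").create_distribution(DistributionConfig=config)`. Treat it as a starting point to check against the current AWS documentation rather than a drop-in setup.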

| Metric | Time (ms) |
|---|---|
| Connect Time | 19 |
| Processing | 168 |
| Resolve | 25 |
| Transfer | 51 |
| TLS | 52 |

The transfer times show a decent improvement (200ms down to 51ms) because of AWS smart-routing the data internally. The only thing we are doing here is serving the dynamic website state. The connections are being handled at the edge by the CDN and then routed into our AWS load balancer, which handles all the heavy lifting and everything behind the processing.

The only issue here is the processing time, which has gone up slightly (158ms to 168ms). That's not enough to really be a problem, as we’ve carved off a huge amount of time via improved connect, resolve, and transfer times (TLS is essentially unchanged at this stage). The increase makes sense: we’ve now added a CDN in the middle that has to process our website data.

If your website is entirely dynamic and you want to stop here, that would be totally fine — everyone’s already getting a faster connect time, so everyone’s happy.

But we want to go fast, right?! So let’s look at caching static assets against the CDN.

Caching static assets against CDN edge servers means taking all your non-dynamic content and making a copy around the world at the edge, so when people make a connection request, they retrieve all the static assets locally. Files like jpg, js, png, gif, etc., are often quite large and account for a lot of transfer time. So, after identifying the files worth caching, we ensure that the CDN caches these at the edge, while ensuring dynamic content is not being cached: we don’t want people to miss out on, say, Raygun’s latest API endpoints!

This is where it gets tricky. The hard part is accurately separating the cacheable assets from the non-cacheable ones. As stated earlier, pictures, movies, static JavaScript, etc., are all fair game for caching. So first, we spend some time classifying the assets that must not be cached. Then we define these as ‘do not cache’ in the CDN and basically let the CDN take care of the rest.

From what’s left, we can choose the assets we want to cache and which ones are dynamic. In Raygun’s case, we want to ensure that nothing that changes regularly is being cached. We can use CloudFront edge locations for this particular use case. AWS explains as follows:
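The classification step can be sketched as an ordered list of path patterns, which is roughly how CloudFront cache behaviors work: the first pattern that matches a request path wins, and anything unmatched falls through to the default behavior. The patterns below are hypothetical examples, not our real rules, and Python's `fnmatchcase` is only an approximation of CloudFront's matching (both treat `*` as a wildcard and are case-sensitive).

```python
from fnmatch import fnmatchcase

# Hypothetical ordered behaviors: the first matching pattern wins.
BEHAVIORS = [
    ("/api/*", "no-cache"),  # dynamic content is listed first so it always wins
    ("*.jpg", "cache"),
    ("*.png", "cache"),
    ("*.gif", "cache"),
    ("*.js", "cache"),
    ("*.mp4", "cache"),
]
DEFAULT_DECISION = "no-cache"  # anything unclassified stays dynamic


def cache_decision(path: str) -> str:
    """Return "cache" or "no-cache" for a request path."""
    for pattern, decision in BEHAVIORS:
        if fnmatchcase(path, pattern):
            return decision
    return DEFAULT_DECISION


for path in ["/images/hero.jpg", "/api/v1/logo.png", "/pricing"]:
    print(path, "->", cache_decision(path))
```

Defaulting to "no-cache" is the cautious choice here: a missed static file only costs some speed, whereas a wrongly cached dynamic page serves stale content.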

“With CloudFront caching, more objects are served from CloudFront edge locations, which are closer to your users. This reduces the load on your origin server and reduces latency.”

By enabling this, we can ensure that our cached assets are nice and close to the people who are looking at them. No longer needing to serve the vast majority of the website from a centralized location means that our processing time drops dramatically.

Combining this with our excellent TLS improvements, we achieve significant speed gains across the board.

| Metric | Time (ms) |
|---|---|
| Connect Time | 2.66 |
| Processing | 10.4 |
| Resolve | 21.3 |
| Transfer | 11.4 |
| TLS | 3.3 |

And just like that, we’ve significantly reduced the time to serve for customers around the world!

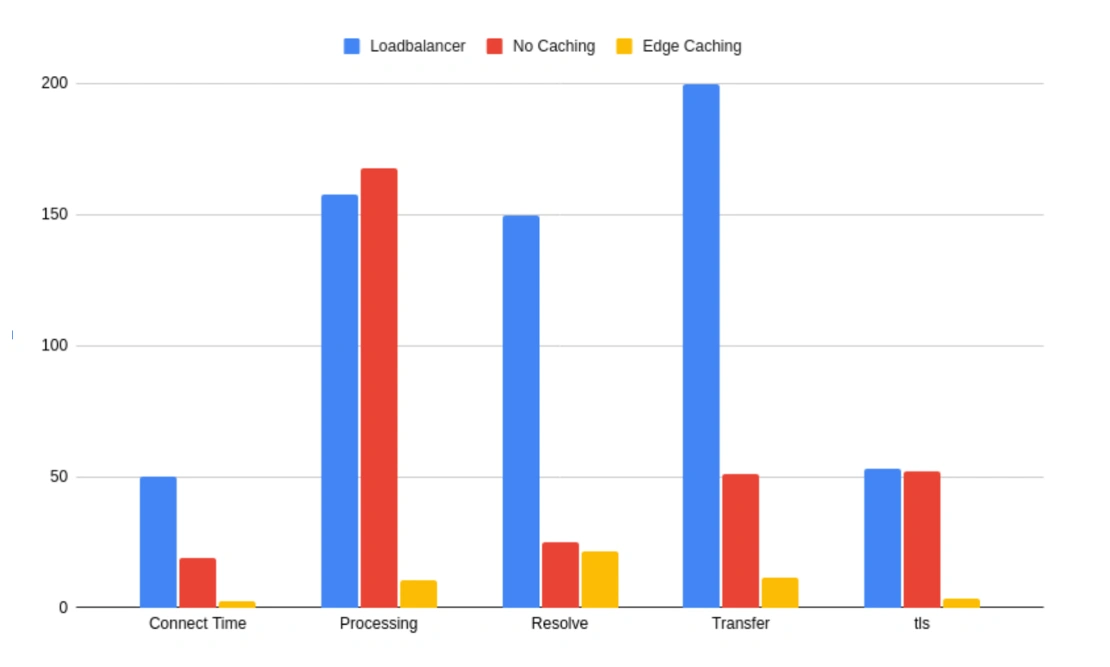

| Metric | Load balancer | No caching | Edge caching |

|---|---|---|---|

| Connect Time (ms) | 50 | 19 | 2.66 |

| Processing (ms) | 158 | 168 | 10.4 |

| Resolve (ms) | 150 | 25 | 21.3 |

| Transfer (ms) | 200 | 51 | 11.4 |

| TLS (ms) | 53 | 52 | 3.3 |

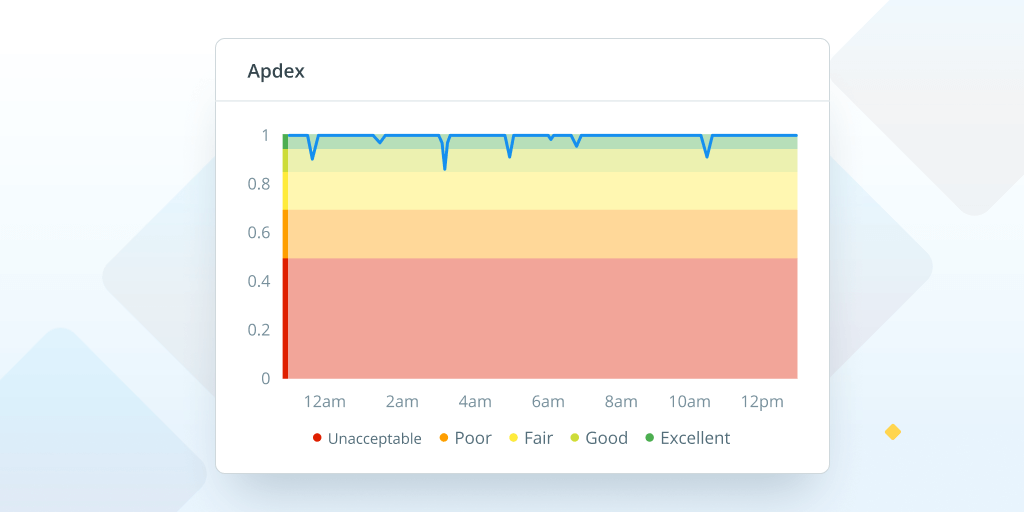

Finally, let’s look at our results.

Here’s a full comparison of where we started, and where we got to.
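To put a single number on each stage, the per-phase averages from the comparison table can simply be added up. This short sketch does that arithmetic; the figures are copied from the table, and summing the averages is our own simplification rather than a metric reported by the testing tool.

```python
# Average times in ms, in the order: connect, processing, resolve, transfer, TLS.
STAGES = {
    "Load balancer": [50, 158, 150, 200, 53],
    "No caching": [19, 168, 25, 51, 52],
    "Edge caching": [2.66, 10.4, 21.3, 11.4, 3.3],
}


def total_ms(stage: str) -> float:
    """Sum of the per-phase averages for one stage."""
    return round(sum(STAGES[stage]), 2)


def reduction_pct(before: str, after: str) -> float:
    """Percentage drop in total time going from one stage to another."""
    return round(100 * (1 - total_ms(after) / total_ms(before)), 1)


for stage in STAGES:
    print(f"{stage}: {total_ms(stage)} ms")
# Load balancer: 611 ms, No caching: 315 ms, Edge caching: 49.06 ms

print(reduction_pct("Load balancer", "No caching"))    # 48.4
print(reduction_pct("Load balancer", "Edge caching"))  # 92.0
```

So the CDN alone roughly halves the total, and adding edge caching brings it down by about 92% from where we started.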

The difference is striking, to say the least. Ensuring that static assets are cached at the edge allows us to massively reduce the processing time as well as the TLS negotiation time. These are critical when it comes to serving content. Dynamic content still serves from our AWS USA location, but serving the majority of the assets close to the client means we achieve significantly faster response times overall. This leads to a really snappy and efficient website and, of course, happy customers as they browse without having to wait those few precious seconds for a page to load.

Mixing cached assets with dynamic content allowed us to continue serving our entire structure without changes to the internals, simply overlaying an entire CDN over the top to do what we set out to do: Go fast. Really fast.

This process took a little legwork but paid off heavily, ensuring ultra-secure, high-speed routed traffic with minimal overhead, while providing extraordinary speed boosts for users around the world. Check out the zippy new site for yourself!