Transitioning from lz4net to K4os.Compression.LZ4

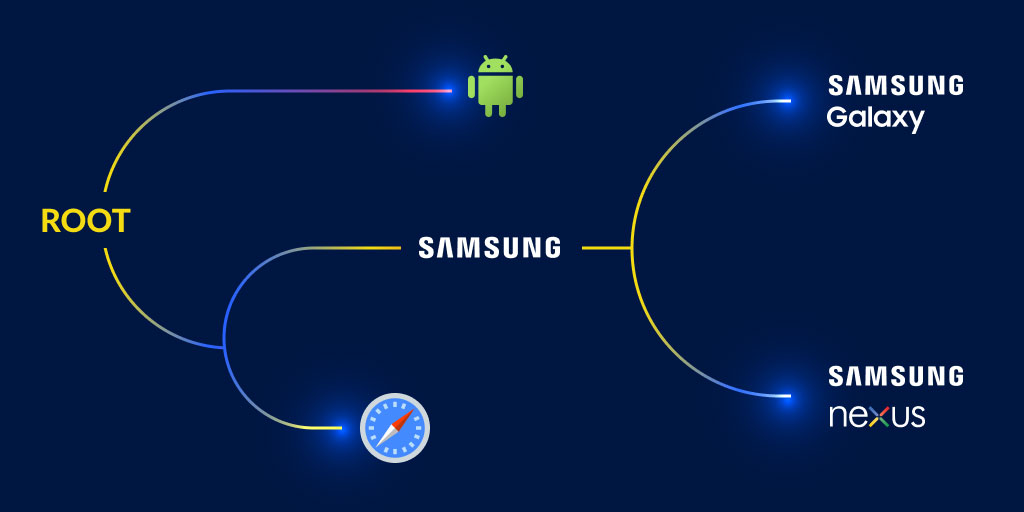

Posted Nov 24, 2023

At Raygun, we process billions of events per month for our customers, so it's well worth looking for the most efficient data storage solutions. Way back when we started out, we chose lz4net to compress the data we store, and it served our purposes well for many years. As we grew, though, we realized this was getting expensive and was starting to undermine our business model. This post focuses on how we made the switch to the K4os.Compression.LZ4 rewrite and the significant performance gains it brought. It also covers the challenges we encountered along the way (namely, the discovery that our performance gains were absent in the Legacy library, which meant we needed to support two decompression implementations!)

Here’s the full story.

The initial compression strategy with lz4net

Our initial compression setup with lz4net was pretty straightforward. We compressed the data to a byte array and then prefixed it with a header. This header contained two crucial pieces of information:

- A ‘magic’ header: This unique identifier allowed us to recognize the compressed data.

- The expected length: This helped ensure data integrity during decompression.

```text
+----------------+----------------+----------------+
|    4 bytes     |    4 bytes     |    x bytes     |
|    (Magic)     |    (Length)    |   (Payload)    |
+----------------+----------------+----------------+
```
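For illustration, here's a minimal C# sketch of how a compressed payload could be wrapped in this header. It assumes the 4-byte length field holds the compressed payload length written with `BitConverter`, which matches how the `IsValidPackage` check later in this post reads it back. `WrapPayload` is a hypothetical helper, and the body bytes are placeholders rather than real LZ4 output.

```csharp
using System;
using System.Text;

// Hypothetical helper: prefixes a compressed body with a 4-byte magic value and a
// 4-byte length field, following the Magic | Length | Payload layout.
static byte[] WrapPayload(byte[] magic, byte[] compressed)
{
    if (magic.Length != 4)
        throw new ArgumentException("Magic must be exactly 4 bytes.", nameof(magic));

    var result = new byte[8 + compressed.Length];
    Buffer.BlockCopy(magic, 0, result, 0, 4);

    // Compressed length in native byte order, as BitConverter.ToInt32 will read it.
    var lengthBytes = BitConverter.GetBytes(compressed.Length);
    Buffer.BlockCopy(lengthBytes, 0, result, 4, 4);

    Buffer.BlockCopy(compressed, 0, result, 8, compressed.Length);
    return result;
}

// Placeholder bytes standing in for real LZ4 output.
var body = new byte[] { 0x10, 0x20, 0x30 };
var package = WrapPayload(Encoding.ASCII.GetBytes("NEWC"), body);
Console.WriteLine(package.Length); // 11
```

The header costs a fixed 8 bytes per payload, regardless of how large the compressed body is.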

This header structure was not just a random design choice. It was a deliberate decision to make the data both identifiable and verifiable. The distinct 'magic' header let us quickly determine how the data had been compressed, while the length let us confirm that the data was complete and hadn't been truncated. The foresight in this original design from years ago would turn out to be a lifesaver!
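Reading a package back reverses those steps: check the magic, check that the declared length matches the bytes that actually arrived, and only then hand the body to the decompressor. The sketch below is one way to do that, under stated assumptions. It reads the length with `BinaryPrimitives.ReadInt32LittleEndian`, which pins the format to little-endian instead of relying on the machine's native byte order as `BitConverter` does (the two agree on little-endian platforms such as x86, x64, and ARM64). `TryUnwrap` is a hypothetical name, and the returned body is what would be passed to the LZ4 decoder.

```csharp
using System;
using System.Buffers.Binary;
using System.Text;

// Hypothetical reader: validates the 8-byte header and, on success, returns the
// compressed body that would be passed to the LZ4 decoder.
static bool TryUnwrap(byte[] payload, byte[] magic, out ArraySegment<byte> body)
{
    body = default;
    if (payload == null || payload.Length < 8)
        return false;

    // The first 4 bytes must match the expected magic value.
    if (!payload.AsSpan(0, 4).SequenceEqual(magic))
        return false;

    // Declared length (assumed little-endian) must equal the bytes after the header.
    // Comparing against payload.Length - 8 avoids computing 8 + length, which could overflow.
    int compressedLength = BinaryPrimitives.ReadInt32LittleEndian(payload.AsSpan(4, 4));
    if (compressedLength != payload.Length - 8)
        return false;

    body = new ArraySegment<byte>(payload, 8, compressedLength);
    return true;
}

var newMagic = Encoding.ASCII.GetBytes("NEWC");
var package = new byte[] { (byte)'N', (byte)'E', (byte)'W', (byte)'C', 2, 0, 0, 0, 0xAA, 0xBB };

Console.WriteLine(TryUnwrap(package, newMagic, out var segment)); // True
Console.WriteLine(segment.Count);                                // 2
Console.WriteLine(TryUnwrap(package[..9], newMagic, out _));     // False (truncated)
Console.WriteLine(TryUnwrap(package, Encoding.ASCII.GetBytes("OLDC"), out _)); // False (wrong magic)
```

Returning an `ArraySegment<byte>` rather than a copy avoids allocating a second buffer just to skip the 8-byte header.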

Using file headers is nothing new, and they're a great way to record information about the file in question. Let's look at the ZIP file format, which has a large header, as an example of how headers are used in widely adopted standards.

| Offset | Size (bytes) | Description |
|---|---|---|
| 0 | 4 | Local file header signature (0x04034b50, which translates to PK.. in ASCII) |
| 4 | 2 | Version needed to extract (minimum ZIP specification version needed to extract the file) |
| 6 | 2 | General purpose bit flag (various flags that can affect the extraction process, like if the file is encrypted, if compression was optimized for speed or size, etc.) |
| 8 | 2 | Compression method (e.g., 0x0000 for no compression, 0x0008 for DEFLATE) |
| 10 | 2 | Last mod file time (the last modification time of the file, in MS-DOS format) |
| 12 | 2 | Last mod file date (the last modification date of the file, in MS-DOS format) |
| 14 | 4 | CRC-32 (a CRC-32 checksum of the uncompressed data) |
| 18 | 4 | Compressed size (size of the compressed data) |
| 22 | 4 | Uncompressed size (size of the original uncompressed data) |
| 26 | 2 | File name length (length of the file name that follows) |
| 28 | 2 | Extra field length (length of the "extra field" that might follow the file name) |
| 30 | variable | File name (the actual name of the file, variable length as specified above) |
| … | variable | Extra field (optional field, can be used to store additional data about the file) |
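To show how a reader consumes a header like this, the sketch below pulls a few fields out of the fixed 30-byte part of a ZIP local file header, using the offsets from the table. ZIP stores multi-byte fields in little-endian order, so the sketch uses `BinaryPrimitives` rather than the platform's native byte order. `ReadLocalHeader` and the synthetic header bytes are illustrative only, not taken from a real archive.

```csharp
using System;
using System.Buffers.Binary;

// Reads selected fields from the fixed-size part of a ZIP local file header.
// Returns null if the buffer is too short or the signature doesn't match.
static (ushort Method, uint CompressedSize, uint UncompressedSize, ushort NameLength)?
    ReadLocalHeader(ReadOnlySpan<byte> header)
{
    const uint LocalFileHeaderSignature = 0x04034b50; // bytes "PK\x03\x04" on disk

    if (header.Length < 30 ||
        BinaryPrimitives.ReadUInt32LittleEndian(header) != LocalFileHeaderSignature)
    {
        return null;
    }

    return (
        Method: BinaryPrimitives.ReadUInt16LittleEndian(header.Slice(8)),
        CompressedSize: BinaryPrimitives.ReadUInt32LittleEndian(header.Slice(18)),
        UncompressedSize: BinaryPrimitives.ReadUInt32LittleEndian(header.Slice(22)),
        NameLength: BinaryPrimitives.ReadUInt16LittleEndian(header.Slice(26)));
}

// Synthetic header: DEFLATE, 120 bytes compressed, 500 uncompressed, 8-char file name.
var bytes = new byte[30];
BinaryPrimitives.WriteUInt32LittleEndian(bytes, 0x04034b50);
BinaryPrimitives.WriteUInt16LittleEndian(bytes.AsSpan(8), 0x0008);
BinaryPrimitives.WriteUInt32LittleEndian(bytes.AsSpan(18), 120);
BinaryPrimitives.WriteUInt32LittleEndian(bytes.AsSpan(22), 500);
BinaryPrimitives.WriteUInt16LittleEndian(bytes.AsSpan(26), 8);

var fields = ReadLocalHeader(bytes);
Console.WriteLine(fields); // (8, 120, 500, 8)
```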

Much like our own compression strategy, the ZIP format demonstrates the value of well-defined headers in data management. Headers like these not only help ensure data integrity but also provide crucial metadata that aids processing and interpretation. As you'll see from our transition, foundational design choices like this play a pivotal role in keeping systems adaptable and future-proof.

The transition process

After thoroughly evaluating K4os.Compression.LZ4 to ensure it met our needs, we began the switch.

Switching compression methods might sound daunting. Our immediate thought was:

Oh, let’s change the API to include the compression information.

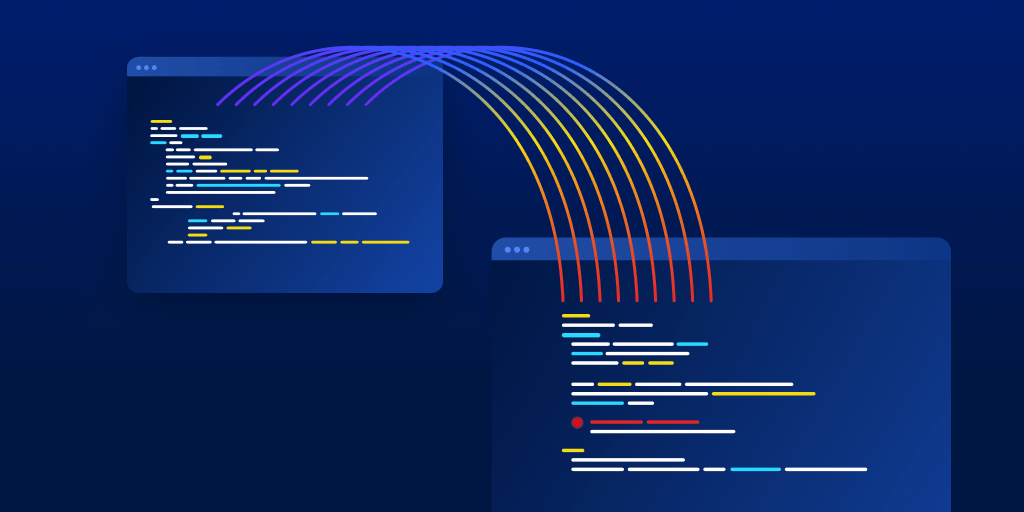

That would have meant changes everywhere to store the compression information and keep it available at decompression time. Thankfully, we could use the existing header to identify the compression method instead. To do this, we created a new implementation of our compression class.

```csharp
public class OldCompression
{
    // Magic = OLDC
    private static readonly byte[] Magic = { (byte) 'O', (byte) 'L', (byte) 'D', (byte) 'C' };

    // ...
}

public class NewCompression
{
    // Magic = NEWC
    private static readonly byte[] Magic = { (byte) 'N', (byte) 'E', (byte) 'W', (byte) 'C' };

    // ...
}
```

The new class defined its own Magic header. For example, the old implementation uses OLDC, while the new implementation uses NEWC.

After implementing the Compress and Decompress methods, we added a check to determine whether a payload's header matches the class's compression method.

```csharp
// Method to validate the payload based on its header
public bool IsValidPackage(byte[] payload)
{
    // Verify the payload length is at least 8 bytes
    if (payload == null || payload.Length < 8)
    {
        return false;
    }

    // Verify the first 4 bytes match the Magic value
    for (int i = 0; i < Magic.Length; i++)
    {
        if (payload[i] != Magic[i])
        {
            return false;
        }
    }

    var compressedLength = BitConverter.ToInt32(payload, 4);

    // Verify the expected total length of the payload is what we received
    return payload.Length == 8 + compressedLength;
}
```

This lets us check whether an incoming payload is valid for an implementation and, if it is, return that implementation:

```csharp
public ICompression GetCompression(byte[] payload)
{
    foreach (var implementation in _compressionImplementations)
    {
        // If the payload is valid for this implementation then return it
        if (implementation.IsValidPackage(payload))
        {
            return implementation;
        }
    }

    throw new InvalidOperationException("Unknown compression format");
}
```

With these changes in place, we deployed the service and made sure that payloads compressed with the old method continued to decompress seamlessly.

With this confirmed, we switched compression over to the new implementation, completing a seamless transition.
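To illustrate how header-based detection supports this two-step rollout, here's a simplified sketch using top-level C# statements. It's an illustration under stated assumptions, not our production code: the tuples stand in for the real `ICompression` classes, `Identity` is a hypothetical placeholder where the old and new LZ4 libraries would be called, and `writeWithNew` is a hypothetical switch standing in for however the writer is selected.

```csharp
using System;
using System.Linq;
using System.Text;

// Placeholder codec (hypothetical): stands in for a real LZ4 encode/decode call.
static byte[] Identity(byte[] input) => (byte[])input.Clone();

// Prefix a body with the 4-byte magic and 4-byte length header.
static byte[] Wrap(byte[] magic, byte[] body) =>
    magic.Concat(BitConverter.GetBytes(body.Length)).Concat(body).ToArray();

// Same rules as IsValidPackage: matching magic and an exact total length.
static bool IsValidPackage(byte[] magic, byte[] payload) =>
    payload != null && payload.Length >= 8 &&
    payload.AsSpan(0, 4).SequenceEqual(magic) &&
    BitConverter.ToInt32(payload, 4) == payload.Length - 8;

// Known formats, identified only by their magic value.
var implementations = new (string Name, byte[] Magic)[]
{
    ("Old", Encoding.ASCII.GetBytes("OLDC")),
    ("New", Encoding.ASCII.GetBytes("NEWC")),
};

// Mirrors GetCompression: the first implementation whose header matches wins.
(string Name, byte[] Magic) GetCompression(byte[] payload)
{
    foreach (var impl in implementations)
    {
        if (IsValidPackage(impl.Magic, payload))
            return impl;
    }
    throw new InvalidOperationException("Unknown compression format");
}

// Step 1: deploy with writeWithNew = false (read both formats, keep writing old).
// Step 2: once old payloads are confirmed readable, flip it to true.
byte[] Compress(byte[] input, bool writeWithNew)
{
    var writer = writeWithNew ? implementations[1] : implementations[0];
    return Wrap(writer.Magic, Identity(input));
}

// Reads never need the switch: the header says which implementation to use.
(string Format, byte[] Data) Decompress(byte[] payload)
{
    var reader = GetCompression(payload);
    // A real implementation would call the reader's own LZ4 decoder on the body here.
    return (reader.Name, Identity(payload.AsSpan(8).ToArray()));
}

var eventData = Encoding.ASCII.GetBytes("event payload");
var fromOldWriter = Compress(eventData, writeWithNew: false);
var fromNewWriter = Compress(eventData, writeWithNew: true);

Console.WriteLine(GetCompression(fromOldWriter).Name); // Old
Console.WriteLine(GetCompression(fromNewWriter).Name); // New
Console.WriteLine(Encoding.ASCII.GetString(Decompress(fromNewWriter).Data)); // event payload
```

Because the read path never consults the write setting, payloads written before and after the switch can sit side by side in storage.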

The results

While the compressed output is similar in size and there are no major storage savings, for our needs the new library is much faster and uses a fraction of the memory.

Using BenchmarkDotNet to compare the two implementations, we got the following results for compression:

| Method | Mean | Error | StdDev | Ratio | Gen0 | Gen1 | Gen2 | Allocated | Alloc Ratio |
|------------- |----------:|----------:|----------:|------:|---------:|---------:|---------:|-----------:|------------:|
| Old_Compress | 45.779 us | 0.7638 us | 0.7145 us | 1.00 | 333.3130 | 333.3130 | 333.3130 | 1044.56 KB | 1.00 |
| New_Compress | 8.951 us | 0.1443 us | 0.1350 us | 0.20 | 1.8768 | 0.0153 | - | 23.03 KB | 0.02 |

From the table, it's clear that New_Compress is both faster and more memory-efficient than Old_Compress. It's about 5 times faster, allocates only about 2% of the memory the old method does, and triggers no Gen2 collections at all. This showcases the significant performance and efficiency gains we achieved by moving to the new compression method.
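For reference, here is the arithmetic behind those figures, taken from the Mean and Allocated columns:

$$
\frac{45.779\ \mu\text{s}}{8.951\ \mu\text{s}} \approx 5.11 \;(\text{speedup}),
\qquad
\frac{23.03\ \text{KB}}{1044.56\ \text{KB}} \approx 0.022 \;(\approx 2.2\%\ \text{of the old allocations})
$$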

For decompression, we got:

| Method | Mean | Error | StdDev | Ratio | RatioSD | Gen0 | Gen1 | Allocated | Alloc Ratio |
|--------------- |---------:|----------:|----------:|------:|--------:|-------:|-------:|----------:|------------:|
| Old_Decompress | 3.986 us | 0.0782 us | 0.1146 us | 1.00 | 0.00 | 1.2360 | 0.0458 | 15.17 KB | 1.00 |
| New_Decompress | 3.804 us | 0.0270 us | 0.0240 us | 0.95 | 0.03 | 1.1559 | 0.0458 | 14.16 KB | 0.93 |

From the table, New_Decompress is approximately 5% faster and allocates around 7% less memory than Old_Decompress. The decompression gains are small compared with compression, but it's still great to see an improvement.
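Worked out from the Mean and Allocated columns, the decompression figures are:

$$
\frac{3.804\ \mu\text{s}}{3.986\ \mu\text{s}} \approx 0.954 \;(\approx 4.6\%\ \text{less time per call}),
\qquad
\frac{14.16\ \text{KB}}{15.17\ \text{KB}} \approx 0.933 \;(\approx 6.7\%\ \text{less memory allocated})
$$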

So, it’s safe to say the transition to K4os.Compression.LZ4 has been a resounding success. Not only have we maintained data integrity, but we’ve also set ourselves up for future flexibility. Our APIs can now easily adapt to any new compression methods we might adopt in the future.

Staying flexible and adaptable is key. Our transition from lz4net to K4os.Compression.LZ4 is a testament to this philosophy. As we continue to optimize our data handling processes, we’re confident that this new setup will serve us well for years to come.

Takeaways

Transitioning from one technology or method to another is often met with apprehension, especially when data integrity is at stake. Our journey from lz4net to K4os.Compression.LZ4 was not without its challenges, and it left us with several key takeaways.

Firstly, forward-thinking design pays off. Our initial decision to use a 'magic' header in our compression strategy, though seemingly simple, proved invaluable. It allowed for a seamless transition, ensuring that older compressed data remained accessible even as we adopted a new compression method. This highlights the significance of building systems with adaptability in mind, even if the immediate benefits aren't apparent.

Secondly, benchmarking and thorough testing are crucial when making such transitions. By comparing the performance of the old and new methods, we were able to quantify the benefits and ensure that the new method met our expectations. It’s essential to have empirical data to back up decisions, especially when they impact core functionalities.

Lastly, flexibility is paramount. In the ever-evolving world of technology, the ability to adapt and evolve is what sets successful systems apart. Our experience with K4os.Compression.LZ4 has reinforced our belief in staying agile and open to change.

While the technical aspects of our transition are noteworthy, the broader lesson lies in the approach. Embrace change, design with the future in mind, and always be ready to adapt. These principles will not only guide successful transitions but also ensure longevity and relevance in an ever-changing technological landscape.