AI engineering for AI Error Resolution

Posted May 22, 2024 | 11 min. (2151 words)

Smart engineering teams are working out how to use Large Language Models (LLMs) to solve real business problems. At Raygun, we’re no exception, and we’re committing our time and effort to developing AI software applications that bring value to our customers.

Our first AI-powered release is AI Error Resolution (AIER), a novel Crash Reporting feature that takes debugging with ChatGPT to the next level.

We know that LLMs have already dramatically increased software engineers’ productivity. However, unless these tools are integrated into your workflow, you waste critical time explaining the details in your prompt—especially when the alarms are sounding at 3 a.m.!

AI Error Resolution preloads your prompt with the relevant data around the failure and the design of your system. It combines the information Raygun captures from the stack trace, environment, and code, and sends it to the LLM of your choice. The LLM returns targeted, actionable suggestions for resolving your error in production quickly, so you can get back to sleep!

A new field of LLM-powered (or AI) software engineering is here to solve complex, real-world problems, shifting from theoretical and experimental to practical and impactful applications.

LLMs like the GPT series (Generative Pre-trained Transformer) have started to reshape industries like customer service, content creation, and software development. With the ease of integration provided by APIs, developers can incorporate advanced AI capabilities into applications without having to train their own models. These APIs allow direct interaction with pre-trained models, offering responses from text generation to complex problem-solving insights.

We’ve witnessed major innovations in how these models are applied and interacted with through libraries and frameworks like Python’s LangChain, which allows developers to query LLMs and integrate their outputs into broader systems. We’re also seeing context-augmentation methods like Retrieval-Augmented Generation (RAG), which improve the depth and accuracy of LLM responses by retrieving relevant information from large data sets at inference time and supplying it to the model. This produces more context-aware responses, which are critical in applications that require nuanced understanding and adaptation to changing data. AI agents and tools that manage and optimize the interaction between these models and the user have further streamlined the application of AI, making it more efficient and accessible across various sectors.

In this article, we’ll bring you on the technical journey of engineering Raygun’s new AI-powered feature. If you’re a software engineer and you think that AI Engineering has nothing to teach you, read on! You’ll see that skilful software engineering still matters while interacting with AI components.

Not AI, Again?!

Over the last 20+ years, AI has produced several hype cycles, each driven by technological breakthroughs that promised revolutionary changes but mostly fell short. Early waves focused on search algorithms like A* or Minimax, which rivalled humans in strategic games like chess. This was followed by artificial neural networks (ANNs) and deep learning, which significantly advanced image recognition. Now we have LLMs, with enhanced natural language processing capabilities and the potential to automate complex linguistic tasks.

While the previous waves led to inflated expectations, the core innovations from these cycles eventually became standard tools in software engineering. This “AI is the frontier” phenomenon forms a pattern where AI pushes technological boundaries, with each breakthrough eventually setting a new baseline for future innovation.

Since their release, ChatGPT and other AI chatbots have revolutionized debugging, showing an understanding of a programmer’s intent that comes from their training on diverse coding datasets. LLMs offer more than just syntax corrections, suggesting functional improvements and alternative coding strategies, streamlining the debugging process and enhancing the development workflow. Crucially, their interactive nature allows for conversational debugging and iterative refinement of code and solutions, making them more user-friendly for human developers. This is a big leap forward from traditional tools, promoting a more intelligent and usable approach to programming challenges.

Determining requirements for AI Error Resolution

When our engineering team started using ChatGPT in November 2022, we immediately saw how we could use it to troubleshoot code issues. As builders of Crash Reporting solutions, we soon started thinking about preloading all the relevant error data directly from Raygun to ChatGPT so we wouldn’t need to spoon-feed all the context to the LLM to produce useful responses.

During Raygun’s AI Week initiative in May 2023, a cross-functional team of 7 Raygunners rapidly developed the first prototype of AI Error Resolution in 4 business days. This was a great learning experience that guided the way for a full development cycle.

It also helped with outlining the general requirements for developing this new AI product in earnest. Three standout features emerged as we looked deeper into this new kind of debugging tool.

REQ1: AIER on the error group–not the error instance

The resolution process should focus on error groups rather than individual error instances. This ensures that the resolution targets the underlying root cause, which is better represented by the error group. Individual error instances, although similar, may have minor variations that can introduce unnecessary noise and complicate the resolution process.

Addressing the error at the group level promotes more efficient and meaningful troubleshooting and resolution of the core issue. This also helps to improve the feature’s learning efficiency by helping it discern and adapt to patterns in errors, facilitates a cleaner data set for analysis, and enhances the predictive capabilities of preemptive error resolution strategies.
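To make the group-versus-instance distinction concrete, here is a minimal Python sketch. It is not Raygun's grouping logic, which this article does not describe. The `ErrorInstance` shape and the grouping rule (same exception type raised from the same top stack frame) are assumptions chosen purely for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ErrorInstance:
    # Hypothetical shape of one captured error occurrence.
    exception_type: str
    top_frame: str
    message: str


def group_key(instance: ErrorInstance) -> tuple[str, str]:
    # Assumed rule: instances share a group when the exception type and the
    # top stack frame match. The message is ignored because it often carries
    # per-instance noise such as IDs.
    return (instance.exception_type, instance.top_frame)


def group_errors(
    instances: list[ErrorInstance],
) -> dict[tuple[str, str], list[ErrorInstance]]:
    groups: dict[tuple[str, str], list[ErrorInstance]] = defaultdict(list)
    for instance in instances:
        groups[group_key(instance)].append(instance)
    return dict(groups)
```

In this sketch, two `NullReferenceException` instances with different order IDs in their messages land in the same group, so a single AI conversation can target their shared root cause.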

REQ2: AI with a customer’s own API key

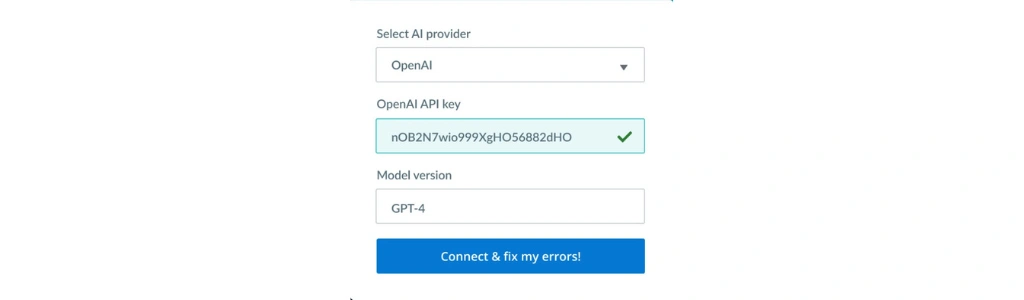

We determined early on that this feature needed to leverage third-party AI providers like the LLMs provided by OpenAI or Azure OpenAI. Importantly, a customer must provide their own API key for integrating with these third-party services. This matters because language models are advancing rapidly: customers who bring their own key can move to the most up-to-date models as they become available, which could make the feature far more powerful.

Plus, when customer data is transferred to an external AI service provider, individual API keys enhance data governance and security. This gives customers greater control over their data interactions with AI services, allowing them to manage their own API endpoints. This includes the ability to adjust usage limits, monitor usage statistics, and directly manage data privacy settings.

REQ3: No data stored outside Raygun

All AI-generated chats, user messages, and interactions must be stored exclusively on Raygun’s own backend infrastructure, rather than on external AI provider systems. This requirement ensures that only the inference process occurs externally, while data storage remains under our control. This enhances security and privacy, as it minimizes the risk of sensitive information being exposed during data transfer or storage on third-party servers. It also ensures that all user data is governed by Raygun’s security protocols and compliance standards.

N.B. For customers running an OpenAI model, it’s helpful to note that as of March 2023, OpenAI no longer uses data submitted through its API to train its models by default.

Storing data on Raygun’s backend also facilitates better governance and auditability, allowing for detailed tracking and monitoring of data access and manipulation. This means we can adhere to stringent data protection regulations such as GDPR and HIPAA. In addition, by retaining all user interactions internally, we can implement customized security measures and access controls for specific customer needs and risk profiles.
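One way to picture this requirement is a store that owns the whole conversation, with the provider treated as a stateless function. The Python sketch below is an assumption-laden illustration, not our production code: `ChatStore` is an in-memory stand-in for a backend table, and `complete` is a hypothetical callable that wraps whichever provider the customer configured.

```python
from dataclasses import dataclass, field
from typing import Callable

Message = dict[str, str]  # {"role": "...", "content": "..."}


@dataclass
class ChatStore:
    """In-memory stand-in for a backend chat table (hypothetical)."""

    _chats: dict[str, list[Message]] = field(default_factory=dict)

    def append(self, error_group_id: str, role: str, content: str) -> None:
        self._chats.setdefault(error_group_id, []).append(
            {"role": role, "content": content}
        )

    def history(self, error_group_id: str) -> list[Message]:
        # Return a copy so callers cannot mutate the stored conversation.
        return list(self._chats.get(error_group_id, []))


def ask(
    store: ChatStore,
    error_group_id: str,
    user_text: str,
    complete: Callable[[list[Message]], str],
) -> str:
    # The provider keeps no state: every call receives the full history
    # from our own store, and the reply is written back to that store.
    store.append(error_group_id, "user", user_text)
    reply = complete(store.history(error_group_id))
    store.append(error_group_id, "assistant", reply)
    return reply
```

Because the history is replayed on every call, only inference happens externally, and the stored conversation remains the single source of truth.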

Front-end development

Our approach to development for AI Error Resolution followed a methodical, incremental process to create a robust and developer-friendly tool.

Initially, we focused on establishing the core interface component. We explored various ideas but landed on a side-drawer instantiated for each error group. This drawer was designed to provide immediate access to AI-driven chats and historical data, allowing the user to swiftly understand the progression and nuances of errors. The initial setup of the drawer was crucial for integrating contextual chat functionalities directly within the error analysis workflow.

To effectively handle a range of potential issues with third-party AI providers, like depleted credits, we implemented a specialized alert system. This system is designed to relay API errors from providers like OpenAI, so that issues like credit shortages are communicated clearly and promptly to the user. This helps maintain transparency and allows developers to quickly address and resolve any service interruptions.
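As a rough illustration of relaying provider errors, the Python sketch below maps an error response to a user-facing alert. It assumes the response body follows OpenAI's documented `{"error": {"code": ..., "message": ...}}` shape. The alert wording and the `to_user_alert` helper are invented for this example.

```python
# Error codes that OpenAI's API is known to return. The alert text is
# illustrative only.
ALERTS_BY_CODE = {
    "insufficient_quota": (
        "Your AI provider account has run out of credits. "
        "Check your plan and billing details."
    ),
    "invalid_api_key": (
        "Your AI provider rejected the API key. "
        "Update it in your integration settings."
    ),
    "rate_limit_exceeded": (
        "Your AI provider is rate limiting requests. Try again shortly."
    ),
}


def to_user_alert(status: int, body: dict) -> str:
    """Translate a provider error response into a message for the user."""
    error = body.get("error") or {}
    code = error.get("code")
    if code in ALERTS_BY_CODE:
        return ALERTS_BY_CODE[code]
    # Unknown errors still surface the provider's own message, if any.
    detail = error.get("message") or "no details provided"
    return f"The AI provider returned an error (HTTP {status}): {detail}"
```

Falling back to the provider's own message means unfamiliar failures are still reported rather than silently swallowed.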

Next, we focused on enhancing our onboarding for third-party AI providers to give our users a seamless and secure setup process. The onboarding interface includes a dropdown menu to select an AI provider, such as OpenAI or Azure OpenAI. As the user selects their provider, the interface dynamically presents initialization fields specific to the provider, such as API keys, deployment names, and LLM models.
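The provider-specific fields can be thought of as a simple lookup keyed by the dropdown selection. The Python sketch below is illustrative: the field names and which fields belong to which provider are assumptions, not the actual onboarding schema.

```python
# Hypothetical field lists per provider. The real onboarding form may differ.
PROVIDER_FIELDS: dict[str, list[str]] = {
    "OpenAI": ["api_key", "model"],
    "Azure OpenAI": ["api_key", "endpoint", "deployment_name"],
}


def fields_for(provider: str) -> list[str]:
    """Return the fields the form should show for the selected provider."""
    if provider not in PROVIDER_FIELDS:
        raise ValueError(f"Unsupported AI provider: {provider}")
    return list(PROVIDER_FIELDS[provider])


def missing_fields(provider: str, values: dict[str, str]) -> list[str]:
    """List required fields that are absent or blank in the submitted values."""
    return [
        name for name in fields_for(provider)
        if not values.get(name, "").strip()
    ]
```

Keeping the field lists in data rather than in branching UI code means adding a provider is a one-line change in this sketch.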

To ensure accuracy and security, each input field, like API keys and deployment names, undergoes a front-end verification process using pattern matching. For example, API keys for OpenAI must begin with the prefix ‘sk-’, so that the input adheres to the expected format before submission. If the verification succeeds, the system then performs a validation check by querying the AI provider to confirm the validity of the data entered.

Throughout this process, the interface provides real-time feedback to the user, detailing where and why failures occur on their inputs. This way, every piece of information is thoroughly verified and validated (V&V) before the user can proceed to the next step of the onboarding process.
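The two-stage check described above (a cheap format verification first, then a validation call to the provider) might look like the following Python sketch. The exact pattern is an assumption that only enforces the ‘sk-’ prefix, and `probe` is a hypothetical callable standing in for a real request to the provider.

```python
import re
from typing import Callable, Optional

# Assumed pattern: checks only the 'sk-' prefix plus at least one more
# non-whitespace character. The real rule may be stricter.
OPENAI_KEY_PATTERN = re.compile(r"sk-\S+")


def verify_and_validate(
    api_key: str, probe: Callable[[str], bool]
) -> Optional[str]:
    """Return None on success, or a message explaining what failed."""
    # Stage 1: local format verification, with no network call.
    if not OPENAI_KEY_PATTERN.fullmatch(api_key):
        return "API key must start with 'sk-'."
    # Stage 2: validation against the provider, only for well-formed keys.
    if not probe(api_key):
        return "The provider rejected this API key."
    return None
```

Running the format check first avoids a round trip to the provider for input that is obviously malformed, and the returned message tells the user which stage failed.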

Incorporating SignalR was critical to support real-time collaborative debugging. This enables dynamic, multi-user chat capabilities within the platform, letting teams communicate effectively in real-time within the debugging interface. This feature is particularly valuable in fast-paced development environments where team collaboration can significantly speed up issue resolution.

Technically, the system was constructed using a blend of HTMX, MVC architecture, HTML helpers, and CSS utility classes. HTMX allowed for creating responsive and interactive elements without extensive JavaScript, which is particularly advantageous in maintaining and scaling the application. This architecture was chosen to align with existing systems for smooth integration and to provide developers with a familiar yet powerful toolset.

Back-end development

We strategically planned and executed the back-end architecture to ensure that each component enhances AI Error Resolution’s overall functionality and scalability.

Initially, we established a robust internal API framework to manage AI chats and interactions with AI providers. This meant that different parts of our system could communicate seamlessly with AI functionalities without direct dependency on external AI systems. By encapsulating AI interactions within internal APIs, we improved security and maintainability, setting a strong basis for future integrations.

With our internal APIs established, we were now able to integrate third-party LLMs like OpenAI and Azure OpenAI. We took a modular approach to these integrations, with these AI providers interfaced via our structured APIs. This allows for the flexible addition or modification of AI services as needed, and adheres to the principles of separation of concerns for future scalability and adaptability.
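A modular provider layer of this kind can be sketched as a small interface plus a registry. The Python below is an illustration under assumptions: `ChatProvider`, `ProviderRegistry`, and `EchoProvider` are hypothetical names, and the echo implementation stands in for a real OpenAI or Azure OpenAI client.

```python
from typing import Protocol


class ChatProvider(Protocol):
    """Hypothetical interface every AI provider integration satisfies."""

    name: str

    def complete(self, messages: list[dict]) -> str: ...


class EchoProvider:
    """Stand-in provider for the sketch. A real one would call an LLM API."""

    name = "echo"

    def complete(self, messages: list[dict]) -> str:
        return "echo: " + messages[-1]["content"]


class ProviderRegistry:
    def __init__(self) -> None:
        self._providers: dict[str, ChatProvider] = {}

    def register(self, provider: ChatProvider) -> None:
        self._providers[provider.name] = provider

    def get(self, name: str) -> ChatProvider:
        try:
            return self._providers[name]
        except KeyError:
            raise ValueError(f"Unknown AI provider: {name}") from None
```

Callers depend only on `ChatProvider`, so adding or swapping a provider in this sketch means registering one more class without touching the rest of the system.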

Next, we focused on dynamic prompt engineering. By carefully crafting the AI-powered prompts based on the structured error group information processed by our back-end, we optimized the queries sent to the AI models. This process meant that the prompts were highly relevant and structured to elicit the most useful responses from the AI, significantly improving diagnostic capabilities.
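To give a feel for prompt construction from structured error data, here is a minimal Python sketch. The `ErrorGroup` fields, the system prompt wording, and the frame limit are all assumptions for illustration. The prompts used in the product are not reproduced in this article.

```python
from dataclasses import dataclass, field

# Illustrative wording only.
SYSTEM_PROMPT = (
    "You are a debugging assistant. Explain the likely root cause of the "
    "error and suggest concrete fixes."
)


@dataclass
class ErrorGroup:
    # Hypothetical subset of the data captured for an error group.
    exception_type: str
    message: str
    stack_trace: list[str]
    environment: dict[str, str] = field(default_factory=dict)


def build_messages(group: ErrorGroup, max_frames: int = 10) -> list[dict]:
    # Truncate long stack traces so the prompt stays within a token budget.
    frames = "\n".join(f"  at {f}" for f in group.stack_trace[:max_frames])
    env = "\n".join(
        f"  {key}: {value}" for key, value in sorted(group.environment.items())
    )
    user_prompt = (
        f"Exception: {group.exception_type}\n"
        f"Message: {group.message}\n"
        f"Stack trace:\n{frames}\n"
        f"Environment:\n{env}"
    )
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_prompt},
    ]
```

Building the prompt from typed fields rather than free text keeps the structure consistent across error groups, which makes the model's responses easier to compare and tune.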

Lastly, we enhanced our real-time communication capabilities by integrating SignalR, supported by a Redis backplane. This setup not only facilitated scalable API interactions across multiple servers and clients but also included Redis-backed concurrency controls to manage load and maintain data consistency. This infrastructure was essential for handling high throughput and providing the seamless real-time experience that collaborative debugging and monitoring depend on.
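The article does not detail the concurrency controls, so the following Python sketch shows one common pattern as an assumption: a per-error-group lock with an expiry, in the style of Redis's `SET key value NX PX ttl`. To stay self-contained it uses an in-memory stand-in rather than a Redis client, and every name in it is hypothetical.

```python
import time
from typing import Callable


class InMemoryLockStore:
    """Stand-in for Redis that mimics set-if-absent with an expiry."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._entries: dict[str, tuple[str, float]] = {}
        self._clock = clock

    def set_if_absent(self, key: str, value: str, ttl_seconds: float) -> bool:
        now = self._clock()
        current = self._entries.get(key)
        if current is not None and current[1] > now:
            return False  # Someone else holds an unexpired lock.
        self._entries[key] = (value, now + ttl_seconds)
        return True

    def delete_if_owner(self, key: str, value: str) -> bool:
        # Only the owner may release, so a slow server cannot free a lock
        # that has since been taken over by another server.
        current = self._entries.get(key)
        if current is not None and current[0] == value:
            del self._entries[key]
            return True
        return False


def try_acquire(
    store: InMemoryLockStore,
    error_group_id: str,
    owner: str,
    ttl_seconds: float = 30.0,
) -> bool:
    """Try to become the only server generating a reply for this group."""
    return store.set_if_absent(f"lock:{error_group_id}", owner, ttl_seconds)
```

In this sketch the expiry guards against a crashed server holding the lock forever, while the owner check prevents one server from releasing another's lock.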

Launch!

Before deploying AIER to real customers, we conducted meticulous, phased testing to guarantee its stability and effectiveness. Initially, we ran alpha testing internally, leveraging our own developers as the first testers. This allowed us to quickly identify and address any fundamental issues in a controlled environment, refining the system based on immediate feedback from users who are deeply familiar with the underlying technology and objectives.

Following successful internal testing, we extended an invitation to a small group of beta-tester customers. This external round of testing helped us obtain real-world feedback and understand how the system performed under diverse usage conditions. It also allowed us to gauge user satisfaction and further tweak the system based on feedback from actual users. The insights of our beta testers were instrumental in making the necessary adjustments before a wider release.

Our initial public release included integration with OpenAI only, so we could focus on a smooth rollout and stress-testing our system before expanding to other providers. We’ve now expanded to include Azure OpenAI, and made GPT-4o the new default model. Each release has been accompanied by iterative improvements to functionality and user experience, driven by user feedback. We’ll continue to extend and improve this product and pursue new possibilities. We believe that AI is the future of debugging, and will increase the quality of software experiences for end users.

AI Error Resolution is an enhancement of Raygun Crash Reporting. To see it in action, sign up for a free 14-day trial of Crash Reporting — no credit card required. Existing Crash Reporting customers can start generating solutions right now!