AI realism (part one)

Posted Mar 20, 2024 | 10 min. (2023 words)

Emotions are running high about AI technologies. In this 2-parter, I do my best to make a rational case on the reality of AI, and how we can respond to it. This is part one; part two next week.

We seem to be struggling to have pragmatic discussions about advancements in Artificial Intelligence. It’s hard to hear calmer voices over the detractors and breathless enthusiasts. Today, I want to make a reasoned, evidence-based case for the potential of this technology, glance at present and future applications, and offer some practical examples for implementing AI within an organization.

Now, let me preface by saying that these tools are far from perfect. There are valid concerns about the hallucinations, the confidently inaccurate answers, the ethics, and the shortcomings. Some are drawing analogies to crypto, predicting an explosion of scams and frauds, and claiming that there’s no real substance there.

While cynicism about crypto has proven fairly valid, this moment seems less like cryptocurrency’s self-promoting hype machine and more like a paradigm change on the scale of the internet. After all, don’t forget how the internet started out: sluggish dial-up speeds and disastrous usability, with people often waiting hours for one janky download. It was painful, but we did it anyway, because everybody could see the outlines of what made this amazing. And that’s exactly, in my opinion, where we are with AI right now. We have a lot to work out still, but we can all see the promise.

This is a very different world from the one we lived in at the birth of the internet, or even the crypto gold rush of 2017. What’s different? Well, we stopped the global economy to respond to a pandemic. We’re now dealing with the fallout of that. Investors have largely pulled back in the market; valuations have been adjusted. People who raised capital now face much more significant challenges in getting a follow-on round. The pain of higher interest rates is flowing through to even the smallest essentials with inflation. Globally, there’s renewed emphasis on productivity and doing more with less to pay off our accumulated debt.

Then, just as everything looks quite dire, we’ve introduced a revolutionary technology that can expand the productivity of the human race massively.

Now, that may sound too good to be true. Let me explain why this could be such a radical solution.

Early in his career, Jeff Bezos worked for a hedge fund. He was doing fine, but obviously had bigger ambitions. One day, he read that the internet was growing at 2300% per year. He knew immediately that there were huge opportunities in an environment with that kind of growth rate. He quit the hedge fund. That was 1994.

The following year, Bill Gates pivoted Microsoft dramatically towards the internet. Mature readers may recall how Microsoft in the nineties was about as terrifying a juggernaut as today’s Google, Amazon, and Facebook combined. They basically controlled everything that happened on any given computer. And yet, Gates looked at the rise of the World Wide Web and decided they needed to change direction. He sent out the “Internet Tidal Wave” memo, calling it “the most important single development to come along.”

Bezos and Gates are in their current positions because they caught on early enough to take advantage of the ascension of the online world.

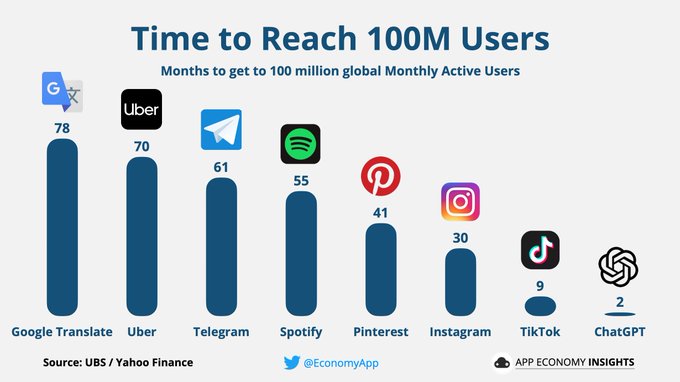

The internet took until roughly 1997-98 to hit a hundred million global users.

I believe that AI will dwarf the impact of the internet. In the case of ChatGPT, we went from zero to a hundred million users in under three months, not years. Everything is speeding up, and we need to be catching this early and seizing our moment to pivot.

Image source @EconomyApp

Looking at tools like Copilot, it’s already clear how we’ll be able to get more information more quickly, generate analysis, spot trends, and combine datasets. We can cut hours of busy work and focus on the higher-value things that humans remain uniquely good at.

Another simple example is Microsoft Teams’ automatic transcription. Previously, a team member took notes during meetings, then edited and filed them. The results were slightly different depending on who took the notes and what they perceived as being the important parts of the discussion. Now, the team can stay present in the meeting, we remove the risk of misinterpretation, and so on. These things boost our ability to get work done right now.

These are helpful, but not revolutionary. In 1997 terms, I remember that it was a big deal when Microsoft Word shipped with the ability to insert a hyperlink in a document. In hindsight, the developments in the mid-to-late nineties weren’t all that groundbreaking. It wasn’t until after the dot-com bubble that tech got seriously competitive and built some of the massive advancements that we use today.

It’s still early days. We’ll see a lot of stuff being shipped, and most of it won’t last. Part of the problem right now is there’s kind of a fog of war. There are so many options to keep track of and they’re changing so fast, and it’s impossible to know what’s just hype.

A lot of people think of AI as simply ChatGPT, and that’s their entire concept of this. ChatGPT is a large language model. You ask questions, or prompt the model, and it gives you an answer. Skeptics point out that this is just a “fancy auto-complete”. It’s not really thinking, it’s not really synthesizing anything. And that’s mostly true. However, that’s also a fairly naive, non-technical evaluation. The reality is that the large language model is a breakthrough based on transformer technology, but that’s just one piece of these systems.

Arguably, we all have something similar to an LLM inside our own brains. What AI doesn’t have, though, is consciousness, which is what decides what to prompt. The human brain has all of these other subsystems that we can’t replicate (yet), but the LLM is a pretty powerful piece of the puzzle.

However, there are frameworks out there, like LangChain, that sit above an LLM and perform higher-level orchestration. Let’s say we want to build a virtual sales development rep (SDR). We’d tell it to come up with criteria, build a list of prospects, contact them all at a particular time, wait a certain amount of time to follow up, etc. You could ask the LLM, what are the steps for a successful SDR campaign? Then, you can have a series of agents that sit above that, so that effectively each answer becomes the input for the next step in the chain. At that point, we’re getting much more than a plain-text set of instructions. We can orchestrate a series of autonomous actions. LangChain can connect things into a smarter, more sophisticated system, making it much more approachable for “normal” people to build their own websites, scripts, and tools.
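To make the "each answer becomes the input for the next step" idea concrete, here is a minimal sketch in plain Python. It deliberately does not use LangChain's actual API; `fake_llm`, `run_chain`, and the prompt templates are hypothetical names invented for illustration, and the "model" is a stub that just echoes its prompt so the example runs without any account or network access.

```python
from typing import Callable, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns a canned reply."""
    return f"[answer to: {prompt}]"


def run_chain(llm: Callable[[str], str], templates: List[str], initial_input: str) -> List[str]:
    """Run the prompts in order, feeding each answer into the next prompt."""
    current = initial_input
    outputs = []
    for template in templates:
        # Fill the previous step's answer into this step's prompt.
        prompt = template.format(input=current)
        current = llm(prompt)
        outputs.append(current)
    return outputs


# Illustrative steps for the SDR example (assumed wording, not a real campaign).
sdr_steps = [
    "List qualifying criteria for: {input}",
    "Build a prospect list using: {input}",
    "Draft a first-contact email based on: {input}",
]

if __name__ == "__main__":
    for step_output in run_chain(fake_llm, sdr_steps, "a successful SDR campaign"):
        print(step_output)
```

Swapping `fake_llm` for a function that calls a real model would be the next step; an orchestration framework adds things like tools, memory, and scheduling on top of this basic loop.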

This is where you could give the system rules and understanding. You hear people say, look, these tools can’t do reasoning. Fair enough. There are, however, algorithms that use a chain of reasoning, that can interpret that if x and y are true, that implies this. And that’s where a lot of work is going. It doesn’t mean the computer is thinking, but it’s a hell of a lot more than auto-complete.
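One classic way to encode "if x and y are true, that implies this" is rule-based forward chaining. The sketch below is an assumption about the kind of algorithm meant here, not a description of how any particular AI product reasons; `forward_chain` and the example facts are hypothetical names chosen for illustration.

```python
from typing import Iterable, List, Set, Tuple

# A rule is (premises, conclusion): if every premise is known, add the conclusion.
Rule = Tuple[Tuple[str, ...], str]


def forward_chain(facts: Iterable[str], rules: List[Rule]) -> Set[str]:
    """Repeatedly apply rules until no new conclusions can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and set(premises) <= known:
                known.add(conclusion)
                changed = True
    return known


# Illustrative rules (assumed, not from a real system).
rules: List[Rule] = [
    (("x", "y"), "z"),          # if x and y are true, that implies z
    (("z",), "follow_up"),      # and z in turn implies a follow-up action
]

if __name__ == "__main__":
    print(sorted(forward_chain({"x", "y"}, rules)))
```

Each derived fact can trigger further rules, which is what makes it a chain of reasoning rather than a single lookup.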

The tech around this right now is still unvarnished, but it’s being worked on even as I type this. Like the internet itself, it’s fiddly and difficult to work with, but there is gold in these hills for the people who figure it out.

So, what should I be doing right now?

While these are still the first few snowballs of a much bigger avalanche, there’s exciting stuff to act on and try out right now, out-of-the-box. Here’s what I recommend in the here and now.

Expert review: We sometimes do custom terms with larger enterprise customers, and that usually means we need to review some of the wording in those contracts. Now, I can read a legal document and have a pretty good understanding, but there are usually things I’m not sure about. One came through recently, and our lawyers quoted $4,000 to review two clauses. So I just put those clauses into ChatGPT, and asked for the risks and ramifications. There’s $4,000 I didn’t have to spend. To be clear, this is at your own risk; obviously legal documents are sensitive and sometimes you just need the peace of mind. But start considering what expertise you can automate and then fact-check.

Improve communications: One of the most attainable uses of AI is to review communications. People will upload a drafted email, social media post, a product description, and ask what could be improved, can you reduce the word count by 50%, and so on. We’re also using image generation within the design team, experimenting to get it to adhere to our brand guidelines.

The cost of content creation, especially generic content, has plummeted. Some people have seen an arbitrage opportunity that will only exist for the next 12 months, and just generate masses of low quality content to capture some value. That’s not sustainable, but what is sustainable is integrating generative tools into your existing tasks, guided by creative professionals.

Form habits early: The easiest steps are obvious ones. Get a ChatGPT account (I’ve set the OpenAI chat webpage to be an “app” on my taskbar, so that it’s visible and accessible). Use their latest model – it’s the best there is (right now). Don’t wait for it to be perfect or mandatory. Start trying things, changing your habits, and shifting your muscle memory.

Previously, to solve a problem, I’d determine what I wanted to achieve, and then plan out the execution in steps, Googling any I was unsure of. It takes time and discomfort to bypass that habit and go straight to asking for the final output. It’s a big shift, because it’s not how anything has worked previously. It’s a fundamental adjustment to how we all think and behave.

How can we maintain security?

As I say, things are moving fast right now, and velocity and safety are often at odds. Many organizations have opted out of using these models at all to protect sensitive information.

You’re probably familiar with Europe’s GDPR requirements, which grant the right to be forgotten: I can request everything an entity knows about me and require them to remove it. Right now, you can’t remove data from a large language model. OpenAI spent millions training that model, and removing data would mean starting again.

So how can you use these tools securely? If you’re highly safety-conscious, you can self-host a model. However, you need some grunty hardware to run it. From the outset, we resolved firmly at Raygun that none of our customer data would go to OpenAI. One of the outcomes of our AI-week is we’re building a fairly powerful server to self-host some models with our internal data. Worst-case scenario, we can just delete the model. There are a lot of these open-source models; they’re all different. It’s harder than just calling OpenAI, but you also get to learn about how to train them and how they actually work.

Stay curious

No one’s an expert right now. AI is recursive, and it’s changing every day. It is helping accelerate its own development. People say it can’t think, and they’re right. However, we’ve built this massive database of everything that it can infer. So it can help us identify things that maybe aren’t as obvious to a human, that can be connected together to achieve an outcome.

It starts with writing an email faster, but this quickly gets us thinking bigger. How can we actually, dramatically improve the effectiveness of our workplaces? Honestly, this is destined to impact the way we work regardless. Everybody is going to face a challenge here over the next few years. And the more they understand, the better prepared they can be for that. We should all keep pushing to educate ourselves and each other, encourage, and experiment. More on that, and more on Raygun’s AI-driven projects, in Part 2.